Source: Ken Acquah on Twitter

Several weeks ago, I wrote a blog post arguing that my old FAANG job was going to be obsolete by the end of 2025, as a result of rapid progress in the breadth of AI capabilities.

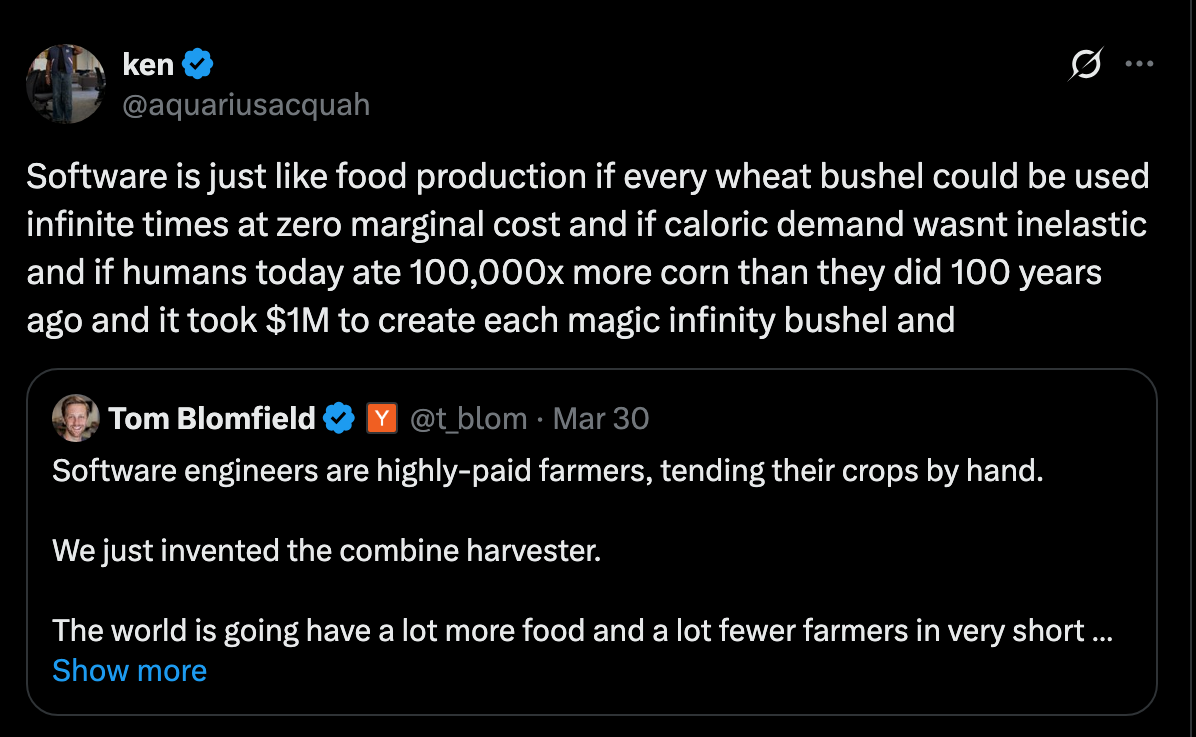

The most common counterargument I heard (not counting the ad hominem ones) went something like this:

This is a restatement of Jevons' Paradox: if demand for a good is constrained primarily by its price, then a decrease in the effective price of that good can increase total consumption of it dramatically. This is often true of industrial inputs, because a cheaper input makes entirely new uses economical: cheaper coal during the Industrial Revolution enabled greater use of coal overall.

However, I don't think the analogy holds. In order for AI dev tools to be a complementary good to your labor, you must sit at least partially upstream or downstream of their capabilities - and being downstream of their capabilities today does not mean you'll be downstream of their capabilities tomorrow. Unless your labor is extremely differentiated, AI dev tools are more likely to be a direct substitute for it than a complement.

Historically, the market for software development has behaved as though it were highly price elastic. (In economics, the price elasticity of demand is the percentage change in quantity consumed relative to the percentage change in price. A good is price elastic if, for example, halving its price leads to the amount consumed more than doubling.) There are several reasons to think this may continue to be the case:

I buy all of these arguments, frankly, and I'm sure that a reduction in effective software development costs will expand the market for software considerably. However:

Additionally, these arguments concern whether demand for overall software development capability is elastic. Historically, this quantity has been functionally the same as demand for software engineer employment. However, I don't think that is likely to remain the case.
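To make the elasticity argument concrete, here is a minimal sketch. It assumes a constant-elasticity demand curve, Q = A * P^(-elasticity), which is a textbook simplification rather than a claim about the real software market. The function names and the elasticity values (1.5, 1.0, 0.5) are illustrative assumptions, not estimates.

```python
def quantity_multiplier(price_multiplier: float, elasticity: float) -> float:
    """How much quantity demanded scales when price scales by price_multiplier.

    Assumes constant-elasticity demand: Q = A * P ** (-elasticity).
    """
    return price_multiplier ** (-elasticity)


def spend_multiplier(price_multiplier: float, elasticity: float) -> float:
    """How much total spend (price * quantity) scales under the same assumption."""
    return price_multiplier * quantity_multiplier(price_multiplier, elasticity)


if __name__ == "__main__":
    # Hypothetical scenario: the effective price of development halves.
    halved = 0.5
    for label, eps in [("elastic", 1.5), ("unit elastic", 1.0), ("inelastic", 0.5)]:
        q = quantity_multiplier(halved, eps)
        s = spend_multiplier(halved, eps)
        print(f"{label:>12}: quantity x{q:.2f}, total spend x{s:.2f}")
```

Under these assumptions, total spend rises after a price cut only when elasticity is above 1, which is the Jevons case. Note that what grows is spend on development capability as a whole; the sketch says nothing about how that spend is split between human engineers and machines.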

In the past, transitions such as assembly → programming languages and low-level → higher-level programming languages essentially just pushed the level of abstraction up a notch. Software engineers were still needed as intelligence glue to piece everything together - they just operated at a higher level of the stack. The overall gain in productivity from working at a higher level was nowhere near enough to make a dent in demand for engineering capability; thus, Jevons' Paradox applied and still more engineers were hired.

Meet: Intelligence Glue as a Service, also known as AI six months from now. Capabilities? Now:

In 6-12 months:

I envision the "intelligence glue" part of SWE jobs being squeezed into higher and higher corners of abstraction, until one day it becomes indistinguishable from the inputs to production upstream of engineering implementation (i.e., ideation, strategy, and marketing).

Instead of coal use, I'd point to a different historical parallel. As recently as 1900, as much as two-thirds of the world's population was in the business of farming. From the 1930s to the 1950s, widespread adoption of fertilizer from the Haber-Bosch process, along with other industrial farming techniques, improved food output per farmer by a factor of perhaps 20. Demand for food, however, is fundamentally inelastic: every human only needs to eat so much of it. As a result, all those people who previously toiled away at farming were able to get other jobs. Today, farming is extremely mechanized and represents about 2% of employment in the developed world, a number which may itself be propped up by subsidies.

This is a wonderful thing for the world economy, and the cost of a whole bunch of important products that rely on software as an industrial input will almost certainly go way down. But I wouldn't advise staying in the business of subsistence farming while your neighbor gets ready to put his combine harvester to use.

The problem is that software engineering employment is correlated not with the returns to running software repeatedly at low marginal cost but with the creation and deployment of new software. The returns on running software go to equity holders; the wage premium commanded by those who write software is purely a function of the scarcity of people (or machines) capable of doing so. What you're really creating as a software engineer is git diffs, not code itself.

The wage premium that engineers command can probably degrade at least as fast as the market for new software expands, given that the erosion of that premium is precisely what drives the expansion. If this is the case, you probably want to find other value adds sooner rather than later.

The fact of the matter is, humans were NOT EVOLUTIONARILY DESIGNED to do symbolic reasoning, and we happen to have invented a machine that is more or less designed to do just that.

There are lots of things that AI models seem poised to suck at for the foreseeable future! For every math/science/coding benchmark that gets smashed, models remain bad at creative writing and most everything else that involves qualitative emulation. RL'ing a model to death won't make it any better at exploring wide latent spaces in which the space of valid outputs is as broad as the space of valid inputs.

But yeah, you should probably graduate from seeing yourself as an unchallenged gatekeeper of a critical industrial process.
